Well, today I thought I'd bring you something different.

Docker is one of the most popular platforms today for running applications easily. It helps us deploy our applications with less configuration work. The main concept behind Docker is virtualization (more precisely, operating-system-level virtualization, also known as containerization). Anyway, this is a fairly large topic and, like you, I'm still learning this stuff. However, I would like to share my experience with this technology with you. I hope this will help someone who is willing to take their first step into Docker. Without further ado, let's dive into the Docker sea! So, as I said, let's start Docker from the basics. 😉

Why do we need Docker?

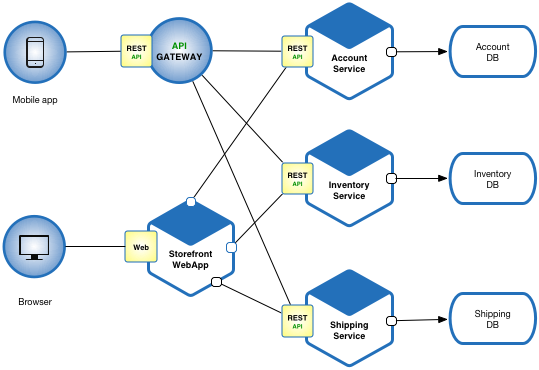

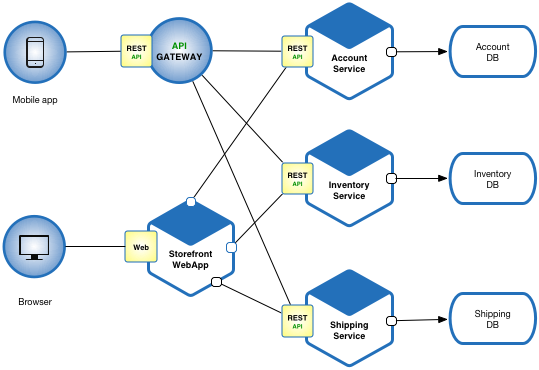

The need for Docker became apparent with the introduction of the microservice architecture. According to the microservice architecture, we should split our application into separate individual services. So, unlike a 3-tier (monolithic) architecture, we have to deploy and run several services.

Figure: Example system with microservice architecture (source: https://microservices.io/patterns/microservices.html)

On the other hand, to get the real advantage of the microservice architecture, we have to keep the system scalable. Docker helps us achieve these goals easily.

How does this work?

As I said earlier, the concept behind Docker is virtualization. We all know that we can run multiple virtual machines on our PC using special software like VirtualBox or VMware. What if we deploy each of our services on its own virtual machine, so that every service is deployed individually and independently? Yes, we can.

Figure: Services deployed in different virtual machines

Then we can achieve our goal even without Docker. But wait! Did you forget something? What do you think about the overall performance of this system when you deploy it this way? Don't forget that even though these PCs are virtual machines, they consume computational resources just like a normal PC. If we consider the diagram above, there are 4 virtual machines running on our PC, so we have divided our computer's computational resources among 5 computers (the host plus the 4 virtual machines).

What if we could simply remove the operating system (Ubuntu 16.04 in our case) from each virtual PC? Then we could save a lot of computational resources, because operating systems consume a lot of them.

Figure: Services deployed without operating system

But is it possible? Yes, it is. This is the place where Docker comes into play. We can put our services into Docker containers instead of virtual machines. Containers share the host's operating system kernel instead of each running a full operating system of their own, so they use far fewer resources than virtual machines. Our services can still work individually and independently.

So Docker basically gives us a space to run our applications in a containerized manner. It gives us a great opportunity to improve performance and make the system scalable. There are tools for managing these containers, called container orchestration software. I will talk about them in another post.
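To make the saving a bit more concrete, here is a rough back-of-the-envelope sketch in Python. Every number in it (memory per guest operating system, per service, and per container) is a made-up assumption for illustration only, not a measurement, and the function names are hypothetical.

```python
def vm_total_memory_mb(services: int, guest_os_mb: int, service_mb: int) -> int:
    """Memory needed when every service runs in its own virtual machine.

    Each VM pays for a full guest operating system plus the service itself.
    """
    return services * (guest_os_mb + service_mb)


def container_total_memory_mb(services: int, container_overhead_mb: int, service_mb: int) -> int:
    """Memory needed when every service runs in its own container.

    Containers share the host kernel, so each one only adds a small overhead.
    """
    return services * (container_overhead_mb + service_mb)


# Hypothetical figures: 4 services, as in the diagram of 4 virtual machines.
SERVICES = 4
GUEST_OS_MB = 1024          # assumed cost of one guest operating system
SERVICE_MB = 256            # assumed cost of one service
CONTAINER_OVERHEAD_MB = 10  # assumed per-container overhead

vm_total = vm_total_memory_mb(SERVICES, GUEST_OS_MB, SERVICE_MB)
container_total = container_total_memory_mb(SERVICES, CONTAINER_OVERHEAD_MB, SERVICE_MB)

print(f"Virtual machines: {vm_total} MB")
print(f"Containers:       {container_total} MB")
print(f"Saved:            {vm_total - container_total} MB")
```

The real numbers depend heavily on the operating system and the services, so treat this only as a way to see where the saving comes from: the guest operating system cost is paid once per virtual machine, while containers avoid it.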

This is how the actual docker system works.

What are the steps to start a Docker container?

Well, I hope to make another post about this topic. Anyway, I will put the basic steps here.

- First, you need to build your application.

- Install Docker on your PC.

- Create a Dockerfile (a file with instructions for the Docker engine to build our Docker image) for your application.

- Build the Docker image from the Dockerfile. (If you have a Docker Hub account, you can push your Docker image there.)

- Run the Docker image as a Docker container.
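The steps above can be sketched in Python as follows. This is only a minimal sketch for a Java application packaged as a jar: it produces the text of a small Dockerfile and the Docker CLI commands as lists, without running anything. The helper names, the image tag `myuser/demo-app:1.0`, the jar path, the port, and the base image `openjdk:17` are all assumptions for illustration, so check Docker Hub for a base image that is currently maintained.

```python
from typing import List


def render_dockerfile(base_image: str, jar_path: str, port: int) -> str:
    """Return the text of a minimal Dockerfile for a jar-packaged Java app."""
    lines = [
        f"FROM {base_image}",                        # start from an existing image
        "WORKDIR /app",                              # working directory inside the image
        f"COPY {jar_path} app.jar",                  # copy the built application in
        f"EXPOSE {port}",                            # document the port the app listens on
        'ENTRYPOINT ["java", "-jar", "app.jar"]',    # command run when the container starts
    ]
    return "\n".join(lines) + "\n"


def build_command(image_tag: str, context_dir: str = ".") -> List[str]:
    """docker build: create an image from the Dockerfile in context_dir."""
    return ["docker", "build", "-t", image_tag, context_dir]


def push_command(image_tag: str) -> List[str]:
    """docker push: upload the image to a registry such as Docker Hub (optional)."""
    return ["docker", "push", image_tag]


def run_command(image_tag: str, container_name: str, port: int) -> List[str]:
    """docker run: start a container from the image in the background."""
    return [
        "docker", "run", "-d",
        "--name", container_name,
        "-p", f"{port}:{port}",  # map host port to container port
        image_tag,
    ]


# Hypothetical values, chosen only for this example.
IMAGE_TAG = "myuser/demo-app:1.0"

print(render_dockerfile("openjdk:17", "target/demo-app.jar", 8080))
for command in (
    build_command(IMAGE_TAG),
    push_command(IMAGE_TAG),
    run_command(IMAGE_TAG, "demo-app", 8080),
):
    print(" ".join(command))
```

You would save the printed Dockerfile text in a file named `Dockerfile` next to your application and then type the printed commands in a terminal. The commands are kept as lists so that they could also be passed to `subprocess.run` later if you want to automate the steps.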

What is the layered structure of Docker?

You may have heard that Docker uses a layered structure for its images. Images that are built on the same layers share those layers with each other.

Figure: Layers of Docker images

You can see in the diagram that we have created two Docker images for two Java-based applications. As both of them are based on Java, they depend on the OpenJDK Docker image. So when you want to deploy your applications, you don't need to download the OpenJDK image from Docker Hub for each application. Instead, you download it once and use it for both applications as a shared component. This saves both storage on the computer and network bandwidth.
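The effect of sharing layers can be illustrated with a small Python sketch. It does not talk to Docker at all; it only models two images as lists of layer names and compares the storage needed with and without sharing. The layer names and sizes are invented for the example.

```python
from typing import Dict, List

# Hypothetical layer sizes in MB (not real measurements).
LAYER_SIZES_MB: Dict[str, int] = {
    "openjdk-base": 300,
    "app-a-jar": 40,
    "app-b-jar": 25,
}

# Two Java-based images that both sit on top of the same OpenJDK layer.
IMAGES: Dict[str, List[str]] = {
    "app-a": ["openjdk-base", "app-a-jar"],
    "app-b": ["openjdk-base", "app-b-jar"],
}


def size_without_sharing(images: Dict[str, List[str]], sizes: Dict[str, int]) -> int:
    """Total size if every image stored its own copy of every layer."""
    return sum(sizes[layer] for layers in images.values() for layer in layers)


def size_with_sharing(images: Dict[str, List[str]], sizes: Dict[str, int]) -> int:
    """Total size if each distinct layer is stored (and downloaded) only once."""
    unique_layers = {layer for layers in images.values() for layer in layers}
    return sum(sizes[layer] for layer in unique_layers)


separate = size_without_sharing(IMAGES, LAYER_SIZES_MB)
shared = size_with_sharing(IMAGES, LAYER_SIZES_MB)

print(f"Without sharing: {separate} MB")
print(f"With sharing:    {shared} MB")
print(f"Saved:           {separate - shared} MB")
```

In this model the saving is exactly the size of the shared OpenJDK layer, because it is counted once instead of twice. The more images you build on the same base, the bigger the saving becomes.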

Advantages of using Docker

- As I said earlier, it saves a lot of the host PC's computational resources.

- Once you build a Docker image, it will run on any other PC that has Docker installed. So there is no need to worry about configuring every PC you are going to deploy your application on.

- Docker is available on the major platforms, including Windows, Linux and macOS.

- Since Docker uses a shared, layered structure, it saves storage on the host PC.

We have come to the end of this post. I hope I have written something informative for anyone who is willing to learn about Docker. Feel free to ask any questions in the comments section. As I am a learner myself, there may be places to improve in this post. If there are things to change or add, put those in the comments as well, so that both I and other readers get a great opportunity to learn more.

I hope to make another post with a practical Docker session. Till then, goodbye!
